Make Your Browser Dance

It was a crisp winter’s evening when I pulled up alongside the pier. I stepped out of my car and the bitterly cold sea air hit my face. I walked around to the boot, opened it and heaved out a heavy flight case. I slammed the boot shut, locked the car and started walking towards the venue.

This was it. My first gig. I thought about all those weeks of preparation: editing video clips, creating 3-D objects, making coloured patterns, then importing them all into software and configuring effects to change as the music did; targeting frequency, beat, velocity, modifying size, colour, starting point; creating playlists of these… and working out ways to mix them as the music played.

This was it. This was me VJing.

This was all a lifetime (well, a decade!) ago.

When I started web designing, VJing took a back seat. I was more interested in interactive layouts, semantic accessible HTML, learning all the IE bugs and mastering the quirks that CSS has to offer. More recently, I have been excited by background gradients, 3-D transforms, the @keyframes rule, as well as new APIs such as getUserMedia, indexedDB and the Web Audio API.

But wait, have I just come full circle? Could it be possible, with these wonderful new things in technologies I am already familiar with, that I could VJ again, right here, in a browser?

Well, there’s only one thing to do: let’s try it!

Let’s take to the dance floor

Over the past couple of years working in The Lab I have learned to take a much more iterative approach to projects than before. One of my new favourite methods of working is to create a proof of concept to make sure my theory is feasible, before going on to create a full-blown product. So let’s take the same approach here.

The main VJing functionality I want to recreate is manipulating visuals in relation to sound. So for my POC I need to create a visual, with parameters that can be changed, then get some sound and see if I can analyse that sound to detect some data, which I can then use to manipulate the visual parameters. Easy, right?

So, let’s start at the beginning: creating a simple visual. For this I’m going to create a CSS animation. It’s just a funky i element with the opacity being changed to make it flash.

See the Pen Creating a light by Rumyra (@Rumyra) on CodePen

A note about prefixes: I’ve left them out of the code examples in this post to make them easier to read. Please be aware that you may need them. A great resource for finding out whether you do is caniuse.com. You can also check out all the code for the examples in this article.

Start the music

Well, that’s pretty easy so far. Next up: loading in some sound. For this we’ll use the Web Audio API. The Web Audio API is based around the concept of nodes. You have a source node: the sound you are loading in; a destination node: usually the device’s speakers; and any number of processing nodes in between. All this processing that goes on with the audio is sandboxed within the AudioContext.

So, let’s start by initialising our audio context.

var contextClass = window.AudioContext;
if (contextClass) {
  //web audio api available.
  var audioContext = new contextClass();
} else {
  //web audio api unavailable
  //warn user to upgrade/change browser
}

Now let’s load our sound file into the new context we created with an XMLHttpRequest.

//will hold the decoded sound once it has loaded
var audioBuffer;

function loadSound() {
  //set audio file url
  var audioFileUrl = '/octave.ogg';
  //create new request
  var request = new XMLHttpRequest();
  request.open("GET", audioFileUrl, true);
  request.responseType = "arraybuffer";
  request.onload = function() {
    //take from http request and decode into buffer
    audioContext.decodeAudioData(request.response, function(buffer) {
      audioBuffer = buffer;
    });
  };
  request.send();
}

Phew! Now we’ve loaded in some sound! There are plenty of things we can do with the Web Audio API: increase volume; add filters; spatialisation. If you want to dig deeper, the O’Reilly Web Audio API book by Boris Smus is available to read online free.
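The loading code stops once the sound has been decoded, so here is a minimal sketch of turning that buffer into a source node and playing it. The helper names createSource and playThroughSpeakers are my own for illustration and are not part of the Web Audio API; the calls inside them (createBufferSource, connect, start) are.

```javascript
// Sketch only: createSource and playThroughSpeakers are hypothetical helper names.

// Wrap a decoded AudioBuffer in a source node (a one-shot player).
function createSource(audioContext, audioBuffer) {
  var source = audioContext.createBufferSource();
  source.buffer = audioBuffer;
  return source;
}

// Connect the source straight to the destination node and start playback.
function playThroughSpeakers(audioContext, source) {
  source.connect(audioContext.destination);
  source.start(0); // 0 = start immediately
}
```

Once the buffer has decoded, something like playThroughSpeakers(audioContext, createSource(audioContext, audioBuffer)) should produce sound. If you add processing nodes later, connect the source to those instead of straight to the destination.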

All we really want to do for this proof of concept, however, is analyse the sound data. To do this we really need to know what data we have.

Learning the steps

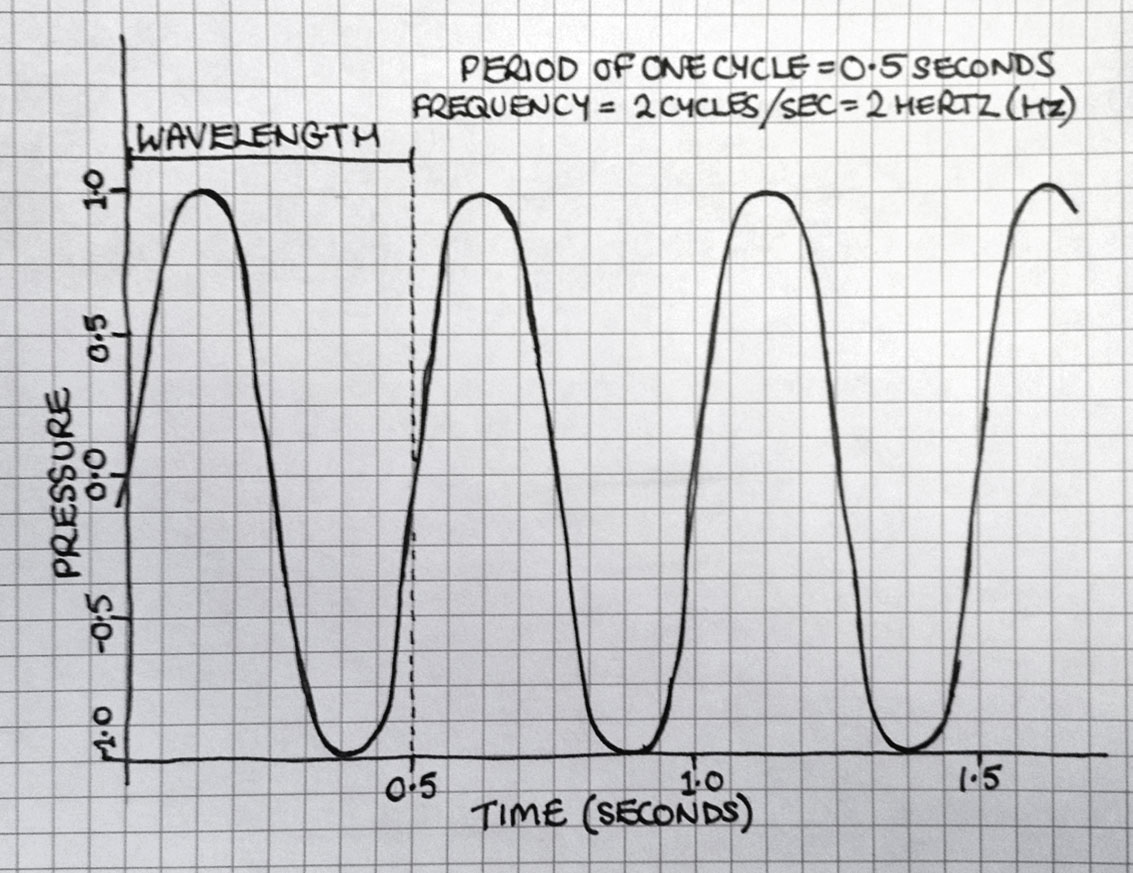

Let’s take a minute to step back and remember our school days and science class. I’m sure if I drew a picture of a sound wave, we would all start nodding our heads.

The sound you hear is caused by pressure differences in the particles in the air. Sound pushes these particles together, causing vibrations. Amplitude is basically strength of pressure. A simple example of change of amplitude is when you increase the volume on your stereo and the output wave increases in size.

This is great when everything is analogue, but the waveform varies continuously and it’s not suitable for digital processing: there’s an infinite set of values. For digital processing, we need discrete numbers.

We have to sample the waveform at set time intervals, and record the amplitude at each one; frequency information can then be worked out from those samples. Luckily for us, just the fact we have a digital sound file means all this hard work is done for us. What we’re doing in the code above is piping that data into the audio context. All we need to do now is access it.
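To make sampling a little more concrete, here is a small sketch that samples a pure sine wave at fixed intervals. It isn’t part of the demo and sampleSine is a made-up helper name; it just shows a continuous wave becoming a list of discrete amplitude values.

```javascript
// Sketch only: sampleSine is a hypothetical helper, not a Web Audio API method.
// Samples a sine wave of `frequency` Hz, `sampleRate` times per second.
function sampleSine(frequency, sampleRate, count) {
  var samples = new Float32Array(count);
  for (var n = 0; n < count; n++) {
    var t = n / sampleRate; // time of the nth sample, in seconds
    samples[n] = Math.sin(2 * Math.PI * frequency * t); // amplitude at that time
  }
  return samples;
}

// One full cycle of a 1Hz wave, sampled 8 times a second
var oneCycle = sampleSine(1, 8, 8);
```

The higher the sample rate, the more closely those discrete values follow the original wave.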

We can do this with the Web Audio API’s analysing functionality. Just pop in an analysing node before we connect the source to its destination node.

var analyser;

function createAnalyser(source) {
  //create analyser node
  analyser = audioContext.createAnalyser();
  //connect to source
  source.connect(analyser);
  //pipe to speakers
  analyser.connect(audioContext.destination);
}

The data I’m really interested in here is frequency. Later we could look into amplitude or time, but for now I’m going to stick with frequency.

The analyser node gives us frequency data via the getByteFrequencyData method.

Don’t forget to count!

To collect the data from the getByteFrequencyData method, we need to pass in an empty array (a JavaScript typed array is ideal). But how do we know how many items the array will need when we create it?

This is really up to us and how high the resolution of frequencies we want to analyse is. Remember we talked about sampling the waveform; this happens at a certain rate (sample rate) which you can find out via the audio context’s sampleRate attribute. This is good to bear in mind when you’re thinking about your resolution of frequencies.

var sampleRate = audioContext.sampleRate;

Let’s say your file sample rate is 48,000, making the maximum frequency in the file 24,000Hz (thanks to a wonderful theorem from Dr Harry Nyquist, the maximum frequency that can be represented is always half the sample rate). The analyser array we’re creating will contain frequencies up to this point. This is ideal as the human ear hears the range of roughly 20–20,000Hz.

So, if we create an array which has 2,400 items, each frequency recorded will be 10Hz apart. However, we are going to create an array which is half the size of the FFT (fast Fourier transform). The FFT size in this case is 2,048, which is the default; you can set it via the fftSize property.

//set our FFT size
analyser.fftSize = 2048;
//create an empty array with 1024 items
var frequencyData = new Uint8Array(1024);

So, with an array of 1,024 items, and a frequency range of 24,000Hz, we know each item is 24,000 ÷ 1,024 ≈ 23.44Hz apart.
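The arithmetic above can be wrapped up in a couple of small helpers. This is just a sketch: binWidth and binIndexForFrequency are names I’ve made up, and it assumes item k of the array represents the frequency k × sampleRate ÷ fftSize. In a browser you can also read the array length from the analyser’s frequencyBinCount property rather than hard-coding 1024.

```javascript
// Sketch only: both helper names are hypothetical.

// Distance in Hz between neighbouring items in the frequency array.
function binWidth(sampleRate, fftSize) {
  // fftSize / 2 items cover the range 0 to sampleRate / 2
  return (sampleRate / 2) / (fftSize / 2);
}

// Index of the array item closest to a given frequency in Hz.
function binIndexForFrequency(frequency, sampleRate, fftSize) {
  var index = Math.round(frequency / binWidth(sampleRate, fftSize));
  // clamp to the last valid item
  return Math.min(index, fftSize / 2 - 1);
}
```

With a sample rate of 48,000 and an FFT size of 2,048, binWidth gives 23.4375, and the A above middle C (440Hz) lands at index 19.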

The thing is, we also want that array to be updated constantly. We could use the setInterval or setTimeout methods for this; however, I prefer the new and shiny requestAnimationFrame.

function update() {
  //constantly getting feedback from data
  requestAnimationFrame(update);
  analyser.getByteFrequencyData(frequencyData);
}

Putting it all together

Sweet sticks! Now we have an array of frequencies from the sound we loaded, updating as the sound plays. Now we want that data to trigger our animation from earlier.

We can easily pause and run our CSS animation from JavaScript:

element.style.animationPlayState = "paused";
element.style.animationPlayState = "running";

Unfortunately, this may not be ideal as our animation might be a whole heap longer than just a flashing light. We may want to target specific points within that animation to have it stop and start in a visually pleasing way and perhaps not smack bang in the middle.

There is no really easy way to do this at the moment as Zach Saucier explains in this wonderful article. It takes some jiggery pokery with setInterval to try to ascertain how far through the CSS animation you are in percentage terms.
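To give a flavour of the bookkeeping involved, here is a rough sketch of working out how far through a looping animation you are. It is not necessarily the technique from the linked article, and animationProgress is a hypothetical helper. It assumes you recorded the start time yourself (for example in an animationstart event handler), that you know the duration, and that the animation has no delay.

```javascript
// Sketch only: animationProgress is a hypothetical helper name.
// Returns a fraction between 0 and 1 for the current iteration of a looping animation.
function animationProgress(startTime, now, durationMs) {
  var elapsed = now - startTime;
  if (elapsed < 0 || durationMs <= 0) {
    return 0; // not started yet, or nothing to measure
  }
  // the remainder is the time spent in the current loop
  return (elapsed % durationMs) / durationMs;
}
```

You would then poll this on a timer and pause the animation only when the fraction reaches a point you are happy with.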

This seems a bit much for our proof of concept, so let’s backtrack a little. We know by the animation we’ve created which CSS properties we want to change. This is pretty easy to do directly with JavaScript.

element.style.opacity = "1";
element.style.opacity = "0.2";

So let’s start putting it all together. For this example I want to trigger each light as a different frequency plays. For this, I’ll loop through the HTML elements and change the opacity style if the frequency gain goes over a certain threshold.

//get light elements
var lights = document.getElementsByTagName('i');
var totalLights = lights.length;

for (var i=0; i<totalLights; i++) {
  //get frequencyData key
  var freqDataKey = i*8;
  //if gain is over threshold for that frequency animate light
  if (frequencyData[freqDataKey] > 160){
    //light up element
    lights[i].style.opacity = "1";
  } else {
    //dim element
    lights[i].style.opacity = "0.2";
  }
}

See all the code in action here. I suggest viewing in a modern browser :)
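The loop above flips each light between two fixed opacities. As a variation (a sketch, not what the demo does), you could scale the opacity with the level of each frequency instead. The helpers opacityForLevel and lightOpacities are hypothetical names; they only assume that each item in frequencyData is a value from 0 to 255, which is what a Uint8Array holds.

```javascript
// Sketch only: both helper names are hypothetical.

// Map a level (0-255) to an opacity between minOpacity and 1.
function opacityForLevel(level, minOpacity) {
  return minOpacity + (1 - minOpacity) * (level / 255);
}

// Work out an opacity for each light, reading every `step`th frequency.
function lightOpacities(frequencyData, totalLights, step, minOpacity) {
  var opacities = [];
  for (var i = 0; i < totalLights; i++) {
    var level = frequencyData[i * step] || 0; // treat out-of-range items as silence
    opacities.push(opacityForLevel(level, minOpacity));
  }
  return opacities;
}
```

In the browser you would call lightOpacities(frequencyData, totalLights, 8, 0.2) on each frame and assign each result to lights[i].style.opacity.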

Awesome! It is true — we can VJ in our browser!

Let’s dance!

So, let’s start to expand this simple example. First, I feel the need to make lots of lights, rather than just a few. Also, maybe we should try a sound file more suited to gigs or clubs.

I don’t know about you, but I’m pretty excited — that’s just a bit of HTML, CSS and JavaScript!

The other thing to think about, of course, is the sound that you would get at a venue. We don’t want to load sound from a file, but rather pick up on what is playing in real time. The easiest way to do this, I’ve found, is to capture what my laptop’s mic is picking up and pipe that back into the audio context. We can do this by using getUserMedia.
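Here is a minimal sketch of how that wiring might look, assuming a browser that supports navigator.mediaDevices.getUserMedia (which needs a secure page and the user’s permission). The helper names connectMicToAnalyser and startMic are mine; createMediaStreamSource is the audio context method that turns the mic stream into a source node.

```javascript
// Sketch only: connectMicToAnalyser and startMic are hypothetical helper names.

// Turn a microphone stream into a source node and feed it to an analyser.
// It is deliberately not connected to the speakers, to avoid feedback.
function connectMicToAnalyser(audioContext, stream, analyser) {
  var micSource = audioContext.createMediaStreamSource(stream);
  micSource.connect(analyser);
  return micSource;
}

// Browser only: ask for mic access, then wire the stream up.
function startMic(audioContext, analyser) {
  return navigator.mediaDevices.getUserMedia({ audio: true })
    .then(function (stream) {
      return connectMicToAnalyser(audioContext, stream, analyser);
    });
}
```

The analyser can then be read on each frame exactly as before; only the source of the sound has changed.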

Let’s include this in this demo. If you make some noise while viewing the demo, the lights will start to flash.

And relax :)

There you have it. Sit back, play some music and enjoy the Winamp-like experience in front of you.

So, where do we go from here? I already have a wealth of ideas. We haven’t started with canvas, SVG or the 3-D features of CSS. There are other things we can detect from the audio as well. And yes, OK, it’s questionable whether the browser is the best environment for this. For one, I’m using a whole bunch of nonsensical HTML elements (maybe each animation could be held within a web component in the future). But hey, it’s fun, and it looks cool and sometimes I think it’s OK to just dance.

About the author

Ruth John is an award-nominated digital artist, web consultant, keynote speaker and writer, with 15 years of experience in the digital industry.

She’s a Google Developer Expert, is on the board of directors for Bath Digital Festival and in her career has worked for companies such as o2 (Telefonica) and BSkyB, and clients such as the BBC, NBC, Cancer Research UK, Heineken and Channel 4 among others.

Ruth also belongs to the { Live : JS } collective, which brings audio and visual code to life in live performances across the globe.