Christmas Gifts for Your Future Self: Testing the Web Platform

In the last year I became a CSS specification editor, on a mission to revitalise CSS Multi-column layout. This has involved learning about many things, one of which has been the Web Platform Tests project. In this article, I’m going to share what I’ve learned about testing the web platform. I’m also going to explain why I think you might want to get involved too.

Why test?

At one time or another it is likely that you have been frustrated by an issue where you wrote some valid CSS, and one browser did one thing with it and another something else entirely. Experiences like this make many web developers feel that browser vendors don’t work together, or they are actively doing things in a different way to one another to the detriment of those of us who use the platform. You’ll be glad to know that isn’t the case, and that the people who work on browsers want things to be consistent just as much as we do. It turns out however that interoperability, which is the official term for “works in all browsers”, is hard.

Thanks to web-platform-tests, a test from another browser vendor just found genuine bug in our code before we shipped 😻

— Brian Birtles (@brianskold) February 10, 2017

In order for W3C Specifications to move on to become W3C Recommendations we need to have interoperable implementations.

6.2.4 Implementation Experience

Implementation experience is required to show that a specification is sufficiently clear, complete, and relevant to market needs, to ensure that independent interoperable implementations of each feature of the specification will be realized. While no exhaustive list of requirements is provided here, when assessing that there is adequate implementation experience the Director will consider (though not be limited to):

- is each feature of the current specification implemented, and how is this demonstrated?

- are there independent interoperable implementations of the current specification?

- are there implementations created by people other than the authors of the specification?

- are implementations publicly deployed?

- is there implementation experience at all levels of the specification’s ecosystem (authoring, consuming, publishing…)?

- are there reports of difficulties or problems with implementation?

https://www.w3.org/2017/Process-20170301/#transition-reqs

We all want interoperability, and achieving it is part of the standards process. The next question is, how do we make sure that we get it?

Unimplemented vs uninteroperable implementations

Before looking at how we can try to improve interoperability, I’d like to look at the reasons we don’t always meet that aim. There are a couple of reasons why browser X is not doing the same thing as browser Y.

The first reason is that browser X has not implemented that feature yet. Relatively small teams of people work on browser engines, and their resources are spread as thinly as those of any company. Behind those browsers are business or organisational goals which may not match our desire for a shiny feature to be made available. There are ways in which we as the web community can help gently encourage implementations - by requesting the feature, by using it so it shows up in usage reports, or writing about it to show interest. However, there will always be some degree of lag based on priorities.

A browser not supporting a feature at all is reasonably easy to deal with these days. We can test for support with Feature Queries, and create sensible fallbacks. What is harder to deal with is when a feature is implemented in different ways by different browsers. In that situation you use the feature, perhaps referring to the specification to ensure that you are writing your CSS correctly. It looks exactly as you expect in one browser and it’s all broken when you test in another.
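As a reminder of what the "easy" case looks like, here is a minimal sketch of a Feature Query with a fallback. The `.cards` class name and the layout itself are hypothetical and chosen only for illustration. The `@supports` rule is the part that matters.

```html
<!DOCTYPE html>
<meta charset="utf-8">
<title>Feature Query sketch</title>
<style>
  /* Fallback: every browser gets inline-block cards */
  .cards > div {
    display: inline-block;
    width: 30%;
    vertical-align: top;
  }

  /* Browsers that understand display: grid also apply this block,
     which overrides the fallback above */
  @supports (display: grid) {
    .cards {
      display: grid;
      grid-template-columns: repeat(3, 1fr);
      gap: 20px;
    }
    .cards > div {
      width: auto; /* undo the fallback width so items fill their track */
    }
  }
</style>
<div class="cards">
  <div>One</div>
  <div>Two</div>
  <div>Three</div>
</div>
```

A Feature Query only tells you that a browser claims to support a property and value. It cannot tell you whether two browsers interpret that property in the same way, which is the harder problem.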

A frequent cause of this kind of issue is that the specification is not well defined in a particular area or that the specification has changed since one or other browser implemented it. CSS specifications are not developed in a darkened room, then presented to browser vendors to implement as a completed document. It would be nice if it worked like that, however the web platform is a gnarly thing. Before we can be sure that a specification is correct, it needs implementing in order that we can get the interoperable implementations I described earlier. A circular process has to happen. Specifications have to be written, browsers have to implement and find the problems, and then the specification has to be revised.

Many people reading this will be familiar with how flexbox changed three times in browsers, leaving us with a mess of incompatibilities and the need to use at least two versions of the spec. This story was an example of this circular process; in this case the specification was flagged as experimental using vendor prefixes. We had become used to using vendor prefixes in production, and early adopters of flexbox were bitten by this. Today, specifications are developed behind experimental flags, as we saw with CSS Grid Layout. Yet there has to come a time when implementations ship and remove those flags, and it may be that, knowingly or unknowingly, some interop issues slip through.

You will know these interop issues as “browser bugs”, and perhaps you have even reported one (thank you!). None of us want them, so how do we make the platform as robust as possible?

How do we ensure we have interoperability?

If you were working on a large web application, with several people committing code, it would be very easy for one person to make a change that inadvertently broke some part of the application. They might not realise the fact that their change would cause a problem, due to not having a complete understanding of the entire codebase. To prevent this from happening, it is accepted good practice to write tests as well as code. The tests can then be run before the application is deployed.

Unless you start out from the beginning writing tests, and are very good at writing a test for every bit of code, it is likely that some issues do slip through from time to time. When this happens, a good approach is to not only fix the issue but also to write a test that would stop it ever happening again. That way the test suite improves over time and hopefully fewer issues happen. The web platform is essentially a giant, sprawling application, with a huge range of people working on it in different ways. There is therefore plenty of opportunity for issues to creep in, so it seems like having some way of writing tests and automating those tests on browsers would be a good thing. That is what the Web Platform Tests project has set out to achieve.

Web Platform Tests

Web Platform Tests is the test suite for the web platform. It is a set of tests for all parts of the web platform, which can be run in any browser and the results reported. This article mostly discusses CSS tests, because I work on CSS. You will find that there are tests covering the full platform, and you can look into whichever area you have the most interest and experience in.

If we want to create a test suite for a CSS specification then we need to ensure that every feature of the specification has a related test. If a change is made to the spec, and a test committed that reflects that change, then it should be straightforward to run that test against each browser and see if it passes.

Currently, at the CSS Working Group, specifications that are at Candidate Recommendation status should have a test committed with every normative change to the spec. To explain the terminology, a normative change is one that changes some of the normative text of a specification - text that contains instructions as to how a browser should render a certain thing. A Candidate Recommendation is the status at which the Working Group officially requests implementations of the spec, therefore it is reasonable to assume that any change may cause an interoperability issue. It is usually the case that representatives from all browsers will have discussed the change, so anyone who needs to change code will be aware. In this case the test allows them to check that their change passes and matches everyone else’s. Tests would also highlight the situation where a change to the spec caused an issue in a browser whose developers otherwise wouldn’t be aware of it, just as a test suite for your web application should alert a person committing code that their change will cause a problem elsewhere.

Discovering the tests

I’ve found that the more I understand the effort that goes into interoperability, and the reasons why creating an interoperable web is so hard, the less frustrating running into browser issues has become. I have somewhere to go, even if all I can do is log the bug.

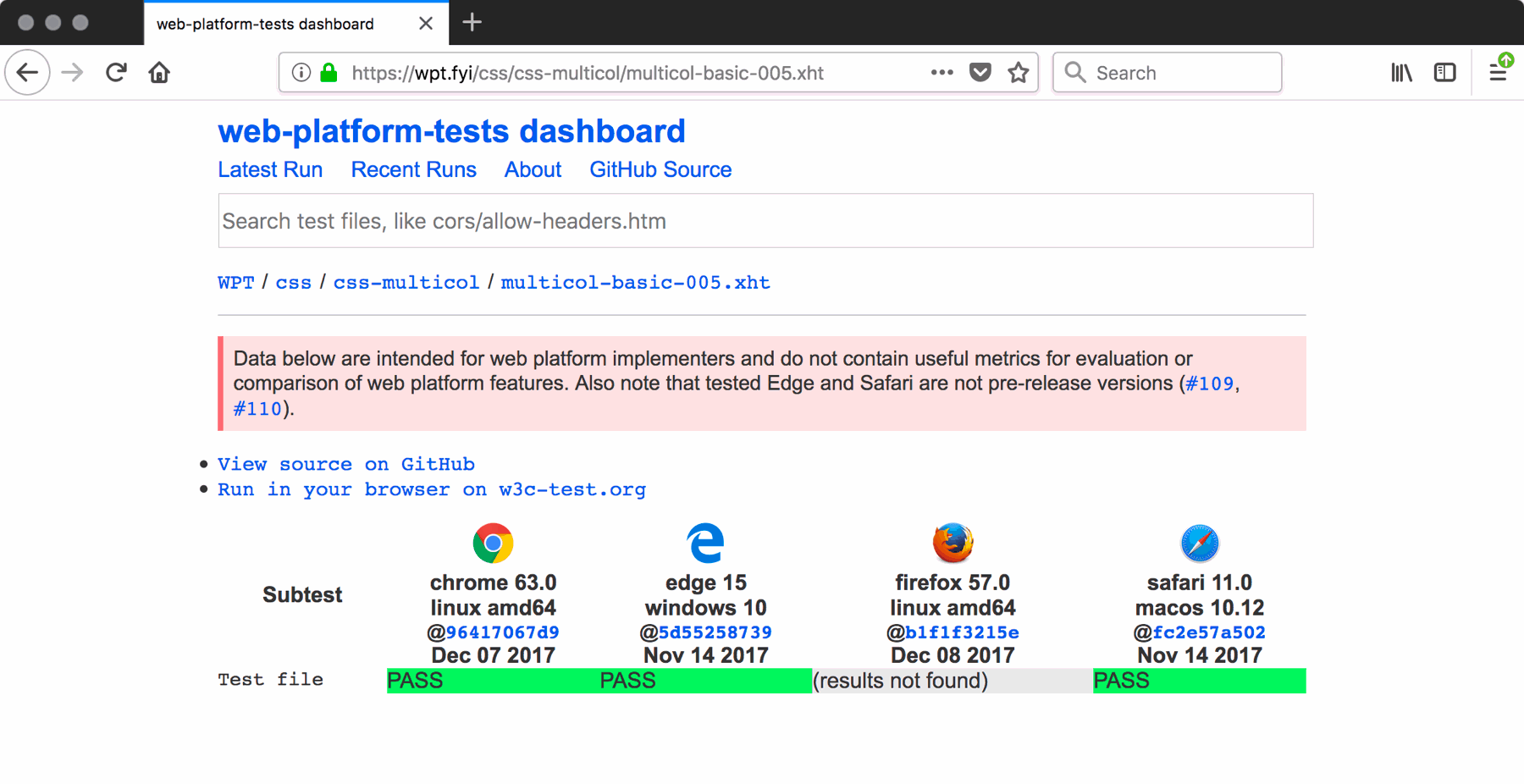

If you are even slightly interested in the subject, go have a poke around wpt.fyi. You can explore the various parts of the web platform and see how many tests have been committed. All the CSS tests are under the directory /css, where you will find each specification. Find a specification you are interested in, and look at the tests. Each test has a link to run it in your own browser to see if it passes. This can be useful in itself: if you are battling with an issue and have reduced it down to something specific, you can go and look to see if there is a test covering that, and whether it appears to pass or fail in the browser you are battling with. If it turns out that the test fails, it’s probably not you!

Note: In some tests you will come across mention of a font called Ahem. This is a font designed for testing which contains consistent glyphs. You can read about how to use the font and download it here.

Contributing to Web Platform Tests

You can also become involved with Web Platform Tests. People often ask me how they can become involved in CSS, and I can think of no better way than by writing tests. You need to really understand a feature to accurately come up with a method of testing if it works or not in the different engines. This is not glamorous work, but it is a very useful thing to be involved with. In addition to helping yourself, and developing the sort of deep knowledge of the platform that enables contribution, you will really help the progress of specifications. There are only a very few people writing specs. If those people also have to write and review all of the tests, it slows down their work. If you want a better, more interoperable web, and to massively improve your ability to have nerdy conversations about highly specific things, testing is the place to start.

A local testing setup

Your first stop should be to visit the home of Web Platform Tests. There is some documentation here, which does tend to assume you know about the tests and what you are looking for - having read this article you know as much as I do. To be able to work on tests you will want to:

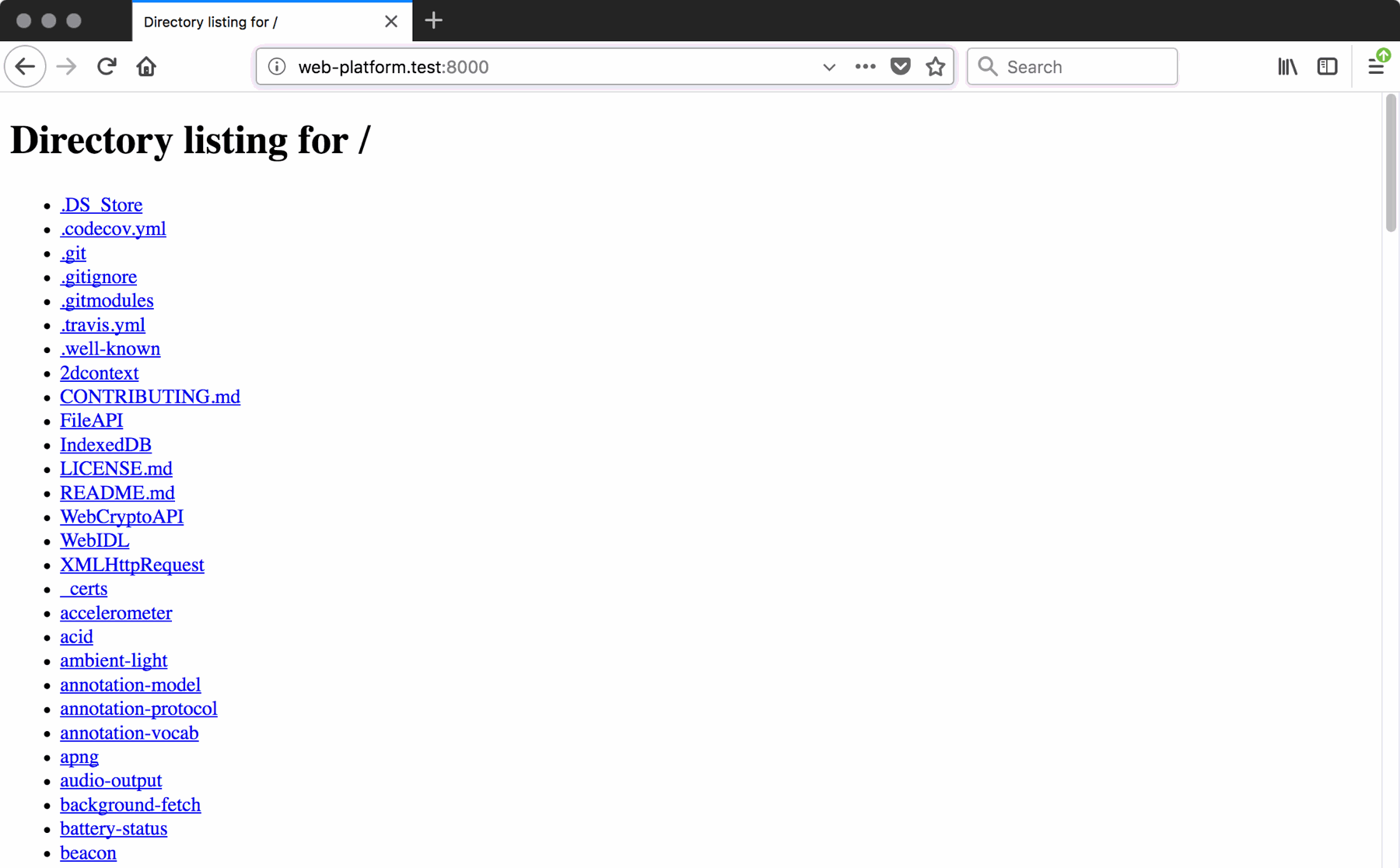

- Clone the WPT repo, this is where all the tests are stored

- Install some tools so you can run up a local copy of the tests

The instructions in the README in the repo should get you up and running. You can then load your own version of the test suite in a browser at http://web-platform.test:8000, or whichever port you set up.

Finding things to test

It’s currently not straightforward to locate low-hanging fruit in order to start committing tests. There are some issues flagged as a good first issue in the GitHub repo, if any of those match your interest and knowledge. Otherwise, a good place to start is where you know of existing interoperability issues. If you are aware of a browser bug, have a look and see if there is a test that addresses it. If not, then writing a test highlights the interoperability issue and, if it is an issue that you are running into, gives you a nice way to see when it has been fixed!

Talk to people

There is an IRC channel at irc://irc.w3.org:6667/testing, where you will find people who are writing tests as well as people who are working on the test suite framework itself. They have always been very friendly, and are likely to welcome people with a real interest in creating tests.

Gathering information

First you need to read the spec. To be able to create a test you need to know and to understand what the specification says should be happening. As I mentioned, writing tests will improve your knowledge dramatically! In general I find that web developers assume their favourite browser has got it right. This isn’t about right or wrong however, or good browsers versus bad ones. The browser with the incorrect implementation may have had a perfect, as-per-the-spec implementation until something changed. Do some investigation and work out what the spec says, and which – if any – browser is doing it correctly.

Another good place to look when trying to create a test for an interop issue is the browser issue trackers. It is quite likely that someone has already logged the issue, and detailed what it is, and even which browsers are as per the spec. This is useful information, as you then have a clue as to which browsers should pass your test. Remember to check version numbers - an issue may well be fixed in a pre-release version of Chrome for example, but not in the public release.

Writing the test

If you’ve ever created a Reduced Test Case to isolate a browser issue, you already have some idea of what we are trying to do with a test. We want to test one thing, in isolation, and to be able to confirm “yes this works as per the spec” or “no, this does not”. The main two types of test are:

- testharness.js tests

- reftests

The testharness.js tests use JavaScript to test an assertion. This framework is designed as a way to test Web APIs. As this quickly gets fairly complicated, and I’m a complete beginner myself at writing these, I’ll refer you to the excellent tutorial Using testharness.js.
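To give a flavour of the shape of such a test, here is a minimal sketch. It is not taken from the repository: the title, the test name and the choice of assertion are my own illustrative assumptions. The two script paths and the `test()` and `assert_equals()` functions are provided by testharness.js when the file is served from a running copy of WPT.

```html
<!DOCTYPE html>
<meta charset="utf-8">
<title>Sketch: gap shorthand sets row-gap and column-gap</title>
<link rel="help" href="https://www.w3.org/TR/css-align-3/#gap-shorthand">
<!-- These two scripts are served from /resources/ by a running copy of WPT -->
<script src="/resources/testharness.js"></script>
<script src="/resources/testharnessreport.js"></script>
<style>
  #grid {
    display: grid;
    gap: 20px;
  }
</style>
<div id="grid"></div>
<script>
  // test() runs the function synchronously and records a pass or fail
  // under the given name; assert_equals() throws if the values differ.
  test(function () {
    const style = getComputedStyle(document.getElementById("grid"));
    assert_equals(style.rowGap, "20px", "row-gap is set by the shorthand");
    assert_equals(style.columnGap, "20px", "column-gap is set by the shorthand");
  }, "gap shorthand sets both longhands");
</script>
```

A test like this checks computed values through script rather than checking what is painted, which is why visual layout behaviour is more often covered by the second type of test.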

Many CSS tests will be reftests. A reftest involves getting two pages to lay out in the same way, so that they are visually the same. For example, you can find a reftest for Grid Layout at: https://w3c-test.org/css/css-grid/alignment/grid-gutters-001.html or at http://web-platform.test:8000/css/css-grid/alignment/grid-gutters-001.html if you have run up your own copy of WPT.

```html
<!DOCTYPE html>
<meta charset="utf-8">
<title>CSS Grid Layout Test: Support for gap shorthand property of row-gap and column-gap</title>
<link rel="help" href="https://www.w3.org/TR/css-grid-1/#gutters">
<link rel="help" href="https://www.w3.org/TR/css-align-3/#gap-shorthand">
<link rel="match" href="../reference/grid-equal-gutters-ref.html">
<link rel="author" title="Rachel Andrew" href="mailto:me@rachelandrew.co.uk">
<style>
    #grid {
        display: grid;
        width: 200px;
        gap: 20px;
        grid-template-columns: 90px 90px;
        grid-template-rows: 90px 90px;
        background-color: green;
    }
    #grid > div {
        background-color: silver;
    }
</style>
<p>The test passes if it has the same visual effect as reference.</p>
<div id="grid">
    <div></div>
    <div></div>
    <div></div>
    <div></div>
</div>
```

I am testing the new gap property (renamed from grid-gap). The reference file can be found by looking for the line:

```html
<link rel="match" href="../reference/grid-equal-gutters-ref.html">
```

In that file, I am using absolute positioning to mock up the way the file would look if gap is implemented correctly.

```html
<!DOCTYPE html>
<meta charset="utf-8">
<title>CSS Grid Layout Reference: a square with a green cross</title>
<link rel="author" title="Rachel Andrew" href="mailto:me@rachelandrew.co.uk" />
<style>
    #grid {
        width: 200px;
        height: 200px;
        background-color: green;
        position: relative;
    }
    #grid > div {
        background-color: silver;
        width: 90px;
        height: 90px;
        position: absolute;
    }
    #grid :nth-child(1) {
        top: 0;
        left: 0;
    }
    #grid :nth-child(2) {
        top: 0;
        left: 110px;
    }
    #grid :nth-child(3) {
        top: 110px;
        left: 0;
    }
    #grid :nth-child(4) {
        top: 110px;
        left: 110px;
    }
</style>
<div id="grid">
    <div></div>
    <div></div>
    <div></div>
    <div></div>
</div>
```

The tests are compared in an automated way by taking screenshots of the test and reference. These are relatively simple tests to write; you will find, however, that the work is not in writing the test. The work is really in doing the research, and making sure you understand what is supposed to happen so you can write the test. That is why, if you really want to get your hands dirty in the web platform, this is a good place to start.
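The offsets in the reference file are simple arithmetic on the values in the test: two 90px tracks plus one 20px gap give the 200px box, and the second row and column start at 90px + 20px = 110px. The sketch below spells that out with custom properties and `calc()`. It is purely an illustration of where the numbers come from, and the `--track` and `--gap` names are my own. A reference you actually commit is probably better written with plain literal values, as above, so that it depends on as few features as possible.

```html
<!DOCTYPE html>
<meta charset="utf-8">
<title>Sketch: deriving the reference offsets from track and gap sizes</title>
<style>
  #grid {
    --track: 90px; /* the column and row track size used in the test */
    --gap: 20px;   /* the gap used in the test */
    /* 90px + 20px + 90px = 200px, the width and height of the box */
    width: calc(2 * var(--track) + var(--gap));
    height: calc(2 * var(--track) + var(--gap));
    background-color: green;
    position: relative;
  }
  #grid > div {
    background-color: silver;
    width: var(--track);
    height: var(--track);
    position: absolute;
    top: 0;
    left: 0;
  }
  /* Second column (items 2 and 4) starts after one track and one gap: 110px */
  #grid > div:nth-child(even) {
    left: calc(var(--track) + var(--gap));
  }
  /* Second row (items 3 and 4) starts at the same offset from the top */
  #grid > div:nth-child(n + 3) {
    top: calc(var(--track) + var(--gap));
  }
</style>
<div id="grid">
  <div></div>
  <div></div>
  <div></div>
  <div></div>
</div>
```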

Committing a test

Once you have written a test you can run the lint tool to make sure that everything is tidy. This tool is run automatically after you submit your pull request, and reviewers won’t accept a test with lint errors, so do this locally first to catch anything obvious.

Tests are added as a pull request. Once you have your test ready to go you can create a pull request to add it to the repository. Your test will be tested and it will then wait for a review.

You may well then find yourself in a bit of a waiting game, as the test needs to be reviewed. How long that takes will depend on how active work is on that spec. People who are in the OWNERS file for that spec should be notified. You can always ask in IRC to see if someone is available who can look at and potentially merge your test.

Usually the reviewer will have some comments as to how the test can be improved, in the same way as the owner of an open source project you submit a PR to might ask you to change some things. Work with them to make your test as good as it can be; the things you learn on the first test you submit will make future ones easier. You can then bask in the glow of knowing you have done something towards the aim of a more interoperable web for all of us.

Christmas gifts for your future self

I have been a web developer for over 20 years. I have no idea what the web platform will look like in 20 more years, but for as long as I’m working on it I’ll keep on trying to make it better. Making the web more interoperable makes it a better place to be a web developer, storing up some Christmas gifts for my future self, while learning new things as I do so.

Resources

I rounded up everything I could find on WPT while researching this article, as well as some other links that might be helpful for you. These links are below. Happy testing!

- Web Platform Tests

- Using testharness.js

- IRC Channel irc://irc.w3.org:6667/testing

- Edge Issue Tracker

- Mozilla Issue Tracker

- WebKit Issue Tracker

- Chromium Issue Tracker

- Reducing an Issue - guide to creating a reduced test case

- Effectively Using Web Platform Tests: Slides and Video

- An excellent walkthrough from Lyza Gardner on her working on tests for the HTML specification - Moving Targets: a case study on testing web standards.

- Improving interop with web-platform-tests: Slides and Video

About the author

Rachel Andrew is a Director of edgeofmyseat.com, a UK web development consultancy and creators of the small content management system, Perch; a W3C Invited Expert to the CSS Working Group; and Editor in Chief of Smashing Magazine. She is the author of a number of books including The New CSS Layout for A Book Apart and a Google Developer Expert for Web Technologies.

She curates a popular email newsletter on CSS Layout, and is passing on her layout knowledge over at her CSS Layout Workshop.

When not writing about business and technology on her blog at rachelandrew.co.uk or speaking at conferences, you will usually find Rachel running up and down one of the giant hills in Bristol, or attempting to land a small aeroplane while training for her Pilot’s license.