Websites of Christmas Past, Present and Future

The websites of Christmas past

The first website was created at CERN. It was launched on 20 December 1990 (just in time for Christmas!), and it still works today, after twenty-four years. Isn’t that incredible?!

Why does this website still work after all this time? I can think of a few reasons.

First, the authors of this document chose HTML. Of course they couldn’t have known back then the extent to which we would be creating documents in HTML, but HTML always had a lot going for it. It’s built on top of plain text, which means it can be opened in any text editor, and it’s pretty readable, even without any parsing.

Despite the fact that HTML has changed quite a lot over the past twenty-four years, extensions to the specification have always been implemented in a backwards-compatible manner. Reading through the 1992 W3C document HTML Tags, you’ll see just how it has evolved. We still have h1 – h6 elements, but I’d not heard of the <plaintext> element before. Despite being deprecated since HTML2, it still works in several browsers. You can see it in action on my website.

As well as being written in HTML, the first website involves no run-time compilation of code; it simply consists of HTML files transmitted over the web. Due to its lack of complexity, it stood a good chance of surviving in the turbulent World Wide Web.

That’s all well and good for a simple, static website. But websites created today are increasingly interactive. Many require a login and provide experiences that are tailored to the individual user. This type of dynamic website requires code to be executed somewhere.

Traditionally, dynamic websites would execute such code on the server, and transmit a simple HTML file to the user. As far as the browser was concerned, this wasn’t much different from the first website, as the additional complexity all happened before the document was sent to the browser.
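As a rough sketch of that traditional approach, the code below builds a complete HTML document on the server and tailors it to the current user. The names renderPage and escapeHtml, and the shape of the user object, are hypothetical and not taken from any particular framework.

```javascript
// Hypothetical helper: escape text so that user-supplied values
// cannot inject markup into the page.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Hypothetical renderer: all the dynamic work happens here, on the server.
// The browser only ever receives the finished HTML string.
function renderPage(user) {
  var greeting = user
    ? 'Welcome back, ' + escapeHtml(user.name)
    : 'Please log in';

  return [
    '<!DOCTYPE html>',
    '<html>',
    '<head><title>Example</title></head>',
    '<body><h1>' + greeting + '</h1></body>',
    '</html>'
  ].join('\n');
}
```

Whatever the server does to produce the string, the browser sees nothing more exotic than an HTML file.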

Doing it all in the browser

In 2003, the first single page interface was created at slashdotslash.com. A single page interface or single page app is a website where the page is created in the browser via JavaScript. The benefit of this technique is that, after the initial page load, subsequent interactions can happen instantly, or very quickly, as they all happen in the browser.

When most of a website’s software runs on the client rather than the server, the client is often referred to as a fat (or thick) client. This means that the bulk of the processing happens on the client rather than the server (which can now be thin).

A fat client can be preferable to a thin client because:

- It takes some processing requirements away from the server, thereby reducing the cost of hosting (a thin server can run on cheaper or fewer machines).

- It can often continue working offline, provided no server communication is required to complete tasks after initial load.

- The latency of internet communications is bypassed after initial load, as interactions can appear near instantaneous when compared to waiting for a response from the server.

But there are also some big downsides, and these are often overlooked:

- They can’t work without JavaScript. Obviously JavaScript is a requirement for any client-side code execution. And as the UK Government Digital Service discovered, 1.1% of their visitors did not receive JavaScript enhancements. Of that 1.1%, 81% had JavaScript enabled, but their browsers failed to execute it (possibly due to dropping the internet connection). If you care about 1.1% of your visitors, you should care about the non-JavaScript experience for your website.

- The browser needs to do all the processing. This means that the hardware it runs on needs to be fast. It also means that we require all clients to have largely the same capabilities and browser APIs.

- The initial payload is often much larger, and nothing will be rendered for the user until this payload has been fully downloaded and executed. If the connection drops at any point, or the code fails to execute owing to a bug, we’re left with the non-JavaScript experience.

- They are not easily indexed as every crawler now needs to run JavaScript just to receive the content of the website.

These are not merely edge case issues to shrug off. The first three issues will affect some of your visitors; the fourth affects everyone, including you.
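To put the Government Digital Service figures quoted above into perspective, here is the arithmetic as a short sketch. The variable names are my own, and the only inputs are the two percentages from the list.

```javascript
// Figures quoted above from the UK Government Digital Service.
var missedEnhancements = 0.011; // 1.1% of all visitors
var hadJavaScriptEnabled = 0.81; // 81% of that 1.1%

// Share of ALL visitors whose browser had JavaScript enabled
// but still failed to run it.
var failedDespiteEnabled = missedEnhancements * hadJavaScriptEnabled;

// Share of ALL visitors in the remaining 19%, who did not have
// JavaScript enabled at all.
var notEnabled = missedEnhancements * (1 - hadJavaScriptEnabled);

function toPercent(fraction) {
  return (fraction * 100).toFixed(2) + '%';
}

console.log(toPercent(failedDespiteEnabled)); // prints "0.89%"
console.log(toPercent(notEnabled));           // prints "0.21%"
```

In other words, roughly nine in every thousand visitors had JavaScript switched on and still received the non-JavaScript experience.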

What problem are we trying to solve?

So what can be done to address these issues? While fat clients solve some inherent issues with the web, they seem to create just as many problems. When attempting to resolve any issue, it’s always good to try to uncover the original problem and work forwards from there. One of the best ways to frame a problem is as a user story. A user story considers the who, what and why of a need. Here’s a template:

As a {who} I want {what} so that {why}

I haven’t got a specific project in mind, so let’s refer to the who as user. Here’s one that could explain the use of thick clients.

As a user I want the site to respond to my actions quickly so that I get immediate feedback when I do something.

This user story could probably apply to a great number of websites, but so could this:

As a user I want to get to the content quickly, so that I don’t have to wait too long to find out what the site is all about or get the content I need.

A better solution

How can we balance both these user needs? How can we have a website that loads fast, and also reacts fast? The solution is to have a thick server, that serves the complete document, and then a thick client, that manages subsequent actions and replaces parts of the page. What we’re talking about here is simply progressive enhancement, but from the user’s perspective.

The initial payload contains the entire document. At this point, all interactions would happen in a traditional way using links or form elements. Then, once we’ve downloaded the JavaScript (asynchronously, after load) we can enhance the experience with JavaScript interactions. If for whatever reason our JavaScript fails to download or execute, it’s no biggie – we’ve already got a fully functioning website. If an API that we need isn’t available in this browser, it’s not a problem. We just fall back to the basic experience.

This second point, of having some minimum requirement for an enhanced experience, is often referred to as cutting the mustard, first used in this sense by the BBC News team. Essentially it’s an if statement like this:

    if ('querySelector' in document
        && 'localStorage' in window
        && 'addEventListener' in window) {
        // bootstrap the JavaScript application
    }

This code states that the browser must support the following features before downloading and executing the JavaScript:

- document.querySelector (can it find elements by CSS selectors)

- window.localStorage (can it store strings)

- window.addEventListener (can it bind to events in a standards-compliant way)

These three properties are what the BBC News team decided to test for, as they are present in their website’s JavaScript. Each website will have its own requirements. The last method, window.addEventListener, is an interesting one. Although it’s simple to bind to events on IE8 and earlier, these browsers have very inconsistent support for standards. Making any JavaScript-heavy website work on IE8 and earlier is a painful exercise, and comes at a cost to all users on other browsers, as they’ll download unnecessary code to patch support for IE.
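One way to keep such a check easy to adjust and to test is to wrap it in a function that receives the window and document objects as arguments, and to request the enhanced script only when the check passes. This is a minimal sketch rather than the BBC’s actual code: the names cutsTheMustard and loadEnhancements, and the script URL in the usage note, are assumptions for illustration.

```javascript
// Hypothetical wrapper around the feature test described above.
function cutsTheMustard(win, doc) {
  return 'querySelector' in doc &&
    'localStorage' in win &&
    'addEventListener' in win;
}

// Hypothetical loader: request the enhanced JavaScript only in
// browsers that pass the test. Other browsers never download it
// and simply keep the core HTML and CSS experience.
function loadEnhancements(win, doc, src) {
  if (!cutsTheMustard(win, doc)) {
    return false;
  }
  var script = doc.createElement('script');
  script.src = src;
  script.async = true; // do not block rendering of the page
  doc.head.appendChild(script);
  return true;
}
```

In a browser this might be called as loadEnhancements(window, document, '/js/enhanced.js'), where the path is a placeholder. Changing the minimum requirement for a particular website then means editing a single function.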

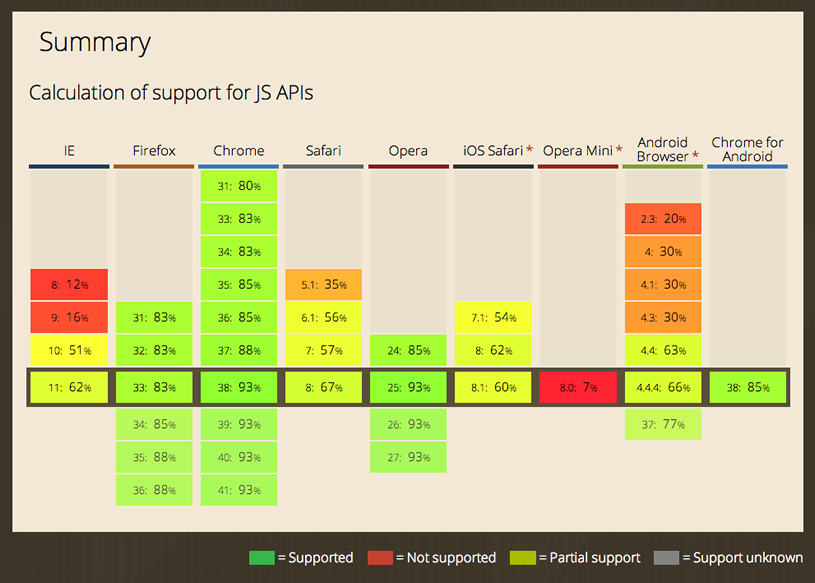

JavaScript API support by browser.

I discovered that IE8 supports 12% of the current JavaScript APIs, while IE9 supports 16%, and IE10 51%. It seems, then, that IE10 could be the earliest version of IE that I’d like to develop JavaScript for. That doesn’t mean that users on versions of IE earlier than 10 can’t use the website. On the contrary, they get the core experience, and because it’s just HTML and CSS, it’s much more likely to be bug-free, and could even provide a better experience than trying to run JavaScript in their browser. They receive the thin client experience.

By reducing the number of platforms that our enhanced JavaScript version supports, we can better focus our efforts on those platforms and offer an even greater experience to those users. But we can only do that if we use progressive enhancement. Otherwise our website would be completely broken for all other users.

So what we have is a thick server, capable of serving the entire website to our users, complete with all core functionality needed for our users to complete their tasks; and we have a thick client on supported browsers, which can bring an even greater experience to those users.

This is all transparent to users. They may notice that the website seems snappier on the new iPhone they received for Christmas than on the Windows 7 machine they got five years ago, but then they probably expected it to be faster on their iPhone anyway.

Isn’t this just more work?

It’s true that making a thick server and a thick client is more work than just making one or the other. But there are some big advantages:

- The website works for everyone.

- You can decide when users get the enhanced experience.

- You can enhance features in an iterative (or agile) manner.

- When the website breaks, it doesn’t break down.

- The more you practise this approach, the quicker you will become.

The websites of Christmas present

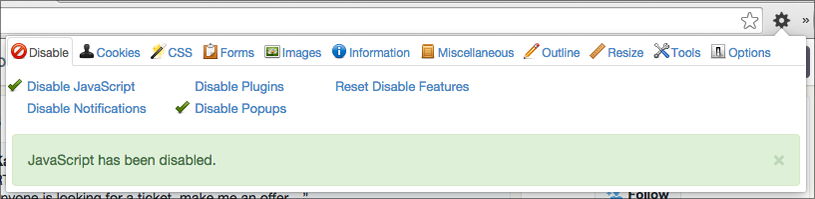

The best way to discover websites using this technique of progressive enhancement is to disable JavaScript and see if the website breaks. I use the Web Developer extension, which is available for Chrome and Firefox. It lets me quickly disable JavaScript.

Web Developer extension.

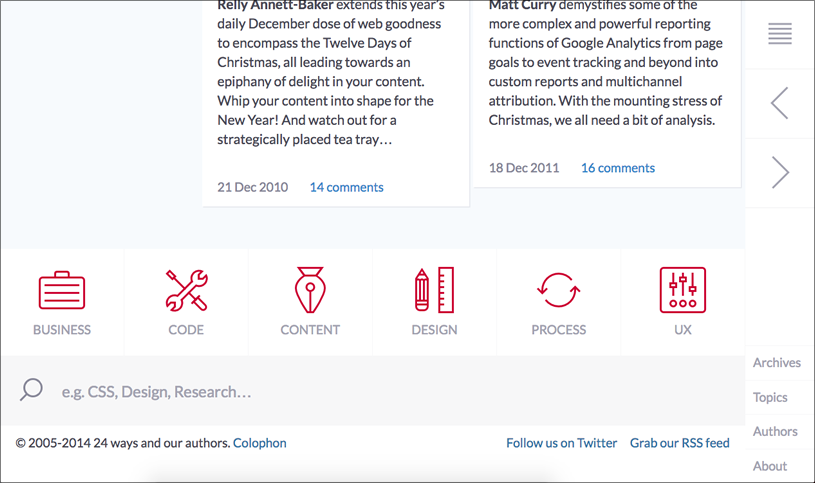

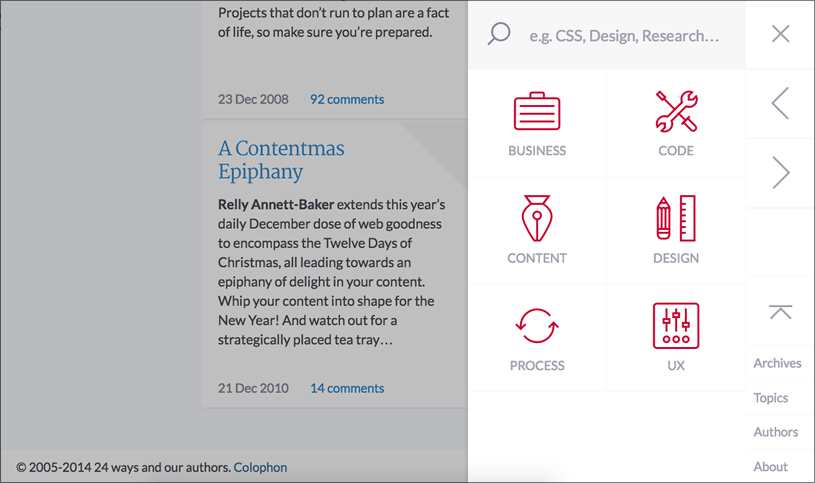

24 ways works with and without JavaScript. Try using the menu icon to view the navigation. Without JavaScript, it’s a jump link to the bottom of the page, but with JavaScript, the menu slides in from the right.

24 ways navigation with JavaScript disabled.

24 ways navigation with working JavaScript.

Google search will also work without JavaScript. You won’t get instant search results or any prerendering, because those are enhancements.

For a more app-like example, try using Twitter. Without JavaScript, it still works, and looks nearly identical. But when you load JavaScript, links open in modal windows and all pages are navigated much quicker, as only the content that has changed is loaded. You can read about how they achieved this in Twitter’s blog posts Improving performance on twitter.com and Implementing pushState for twitter.com.
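The general pattern described here can be sketched in a few lines. This is not Twitter’s implementation, only a minimal illustration under stated assumptions: ordinary links work without JavaScript, and when the script runs it intercepts clicks, fetches just the changed content and updates the address bar with pushState. The function name enhanceNavigation, the data-enhance attribute, the #content element and the fetchFragment callback are all hypothetical.

```javascript
// Hypothetical enhancement layer. fetchFragment(url) is assumed to
// return a promise (or any thenable) that resolves to an HTML string.
function enhanceNavigation(win, doc, fetchFragment) {
  var content = doc.querySelector('#content');
  var links = doc.querySelectorAll('a[data-enhance]');

  if (!content) {
    return 0; // nothing to enhance; the plain links keep working
  }

  function onClick(event) {
    // Capture the link now: currentTarget is cleared after dispatch.
    var link = event.currentTarget;
    event.preventDefault(); // skip the full page load

    fetchFragment(link.href).then(function (html) {
      content.innerHTML = html; // replace only what has changed
      if (win.history && win.history.pushState) {
        win.history.pushState(null, '', link.href);
      }
    }, function () {
      // If the request fails, fall back to a normal page load.
      win.location.href = link.href;
    });
  }

  for (var i = 0; i < links.length; i++) {
    links[i].addEventListener('click', onClick);
  }
  return links.length; // number of links that were enhanced
}
```

Because the markup is a set of plain links to begin with, a failure at any step leaves the visitor with the server-rendered website.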

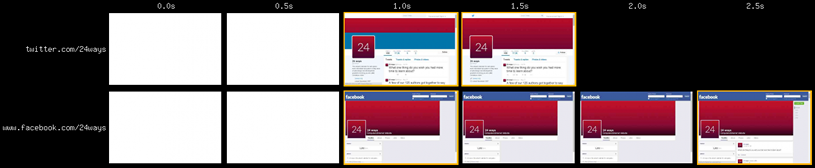

Unfortunately, Facebook doesn’t use progressive enhancement, which means not only that the website doesn’t work without JavaScript, but also that it takes longer to load. I tested it on WebPagetest and if you compare the load times of Twitter and Facebook, you’ll notice that, despite putting similar content on the page, Facebook takes two and a half times longer to render the core content on the page.

Facebook takes two and a half times longer to load than Twitter.

Websites of Christmas yet to come

Every project is different, and making a website that enjoys a long life, or serves a larger number of users, may or may not be a high priority. But I hope I’ve convinced you that it certainly is possible to look to the past and future simultaneously, and that there can be significant advantages to doing so.

About the author

Josh Emerson is a front end developer working at Mendeley. He cares about progressive enhancement and enjoys challenging assumptions about user needs and device capabilities.